In this activity, grayscale images are enhanced by manipulating their histogram, which, when normalized, is the gray-level probability distribution function (PDF). The histogram can be modified using the cumulative distribution function (CDF) of the image and a desired CDF. The image is modified by backprojecting its grayscale values through the desired CDF.

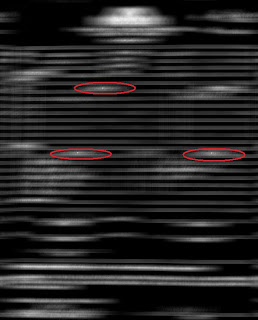

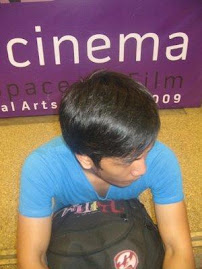

A grayscale image with poor contrast was downloaded from the internet. Then the grayscale histogram of the image was obtained using Scilab.

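grays = gray_imread('gray_image.jpg'); // read the image as grayscale

// compute and plot the histogram; assumes integer gray levels in 0..255 (rescale first if the reader returns values in 0..1)

j = 1;

pix = [];

for i = 0:255

[x, y] = find(grays == i); // positions of the pixels with gray level i

pix(j) = length(x); // number of such pixels

j = j + 1;

end

plot(0:255, pix(:)'); // histogram: pixel count per gray level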

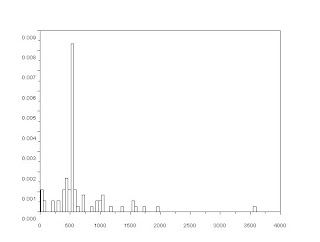

The grayscale image is shown above along with its grayscale histogram. We then determine the CDF of the image from its PDF. The CDF is given by

$$T(r) = \int_0^r p_1(g)\,dg$$

where p1(r) is the PDF of the image. The CDF of the grayscale image above is shown at the left side below.
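For a digital image the integral becomes a running sum over the 256 gray levels. The following is a minimal sketch of that step on a made-up 4x4 image; the names `img`, `counts`, `p1` and `T` are illustrative and not taken from the original code.

```scilab
// Toy 4x4 image with integer gray levels in 0..255 (made-up values)
img = [50 50 60 60; 50 70 70 60; 80 80 70 60; 80 90 90 100];

// Histogram: number of pixels at each gray level 0..255
counts = zeros(1, 256);
for g = 0:255
    counts(g + 1) = length(find(img == g));
end

// Dividing by the total pixel count gives the PDF p1(r)
p1 = counts / sum(counts);

// The running sum of the PDF is the discrete CDF T(r)
T = cumsum(p1);

disp(T(51));   // fraction of pixels with gray level <= 50
disp(T(256));  // fraction of pixels with gray level <= 255
```

Because Scilab indices start at 1, gray level `g` is stored at index `g + 1` throughout.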


We are going to remap the gray levels of the image so that the CDF of the reconstructed image looks like our desired CDF. The CDF of a uniform distribution is a straight, increasing line. If we want our image to have a uniform distribution of gray values, we use a straight line, shown above right, as our desired CDF.

The process of backprojection is illustrated below.

Each pixel value has a corresponding CDF value. This CDF value is located on the desired CDF, and the pixel value is then replaced by the gray level at which the desired CDF attains that value.
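In symbols, this backprojection can be written compactly. The labels $T$ for the image CDF and $G$ for the desired CDF are introduced here for illustration and do not come from the original write-up:

$$z = G^{-1}\big(T(r)\big)$$

where $r$ is the original gray level and $z$ is its replacement. For a straight-line desired CDF over gray levels 0 to 255, $G(z) = z/255$, so the mapping reduces to $z = 255\,T(r)$.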


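b = pix; // histogram counts

c = cumsum(b); // cumulative counts

f = c/max(c); // CDF of the image, normalized to [0, 1]

x = 0:255; // gray levels

imsize = size(grays);

dCDF = x; // desired CDF: a straight line

for i = 1:imsize(1)

for j = 1:imsize(2)

ind = find(x == grays(i, j)); // index of this pixel's gray level

grays(i, j) = f(ind); // new pixel value, normalized to [0, 1]

end

end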
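Since every pixel with the same gray level receives the same new value, the per-pixel loop can also be expressed as a 256-entry lookup table. The sketch below assumes integer gray levels in 0..255 and uses a made-up low-contrast image; `img`, `lut` and `img_eq` are illustrative names, and the output is scaled back to 0..255 rather than left in [0, 1].

```scilab
// Toy low-contrast image: values crowded between 100 and 103 (made-up data)
img = [100 100 101 101; 100 101 102 102; 101 102 102 103; 102 103 103 103];

// Histogram and normalized CDF of the image
counts = zeros(1, 256);
for g = 0:255
    counts(g + 1) = length(find(img == g));
end
T = cumsum(counts) / sum(counts);

// For a straight-line desired CDF, the new gray level is 255 times the CDF value,
// so one table entry per gray level covers every pixel
lut = round(255 * T);

// Apply the table; matrix() restores the image shape after linear indexing
img_eq = matrix(lut(img(:) + 1), size(img, 1), size(img, 2));

disp(img_eq);
```

In this toy case the four input levels, originally one gray level apart, are spread over a much wider range of output levels, which is the contrast stretch that equalization is meant to produce.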

The resulting image is shown below.

The transformed image shows a significant increase in contrast. This image is now called a histogram-equalized image. To learn more about the image, we display its grayscale histogram and its CDF.

Compared with the original histogram, the histogram of the transformed image shows a more uniform distribution of gray values. As intended, the CDF of the transformed image closely matches the desired CDF.

----------------------------------------------------------------------------------------------------------------

We have manipulated the histogram of a grayscale image using a linear desired CDF. However, the human eye has a nonlinear response, so we can mimic it by using a nonlinear desired CDF. We use a tanh function as our nonlinear desired CDF. Its plot is shown below.

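x = 0:255;

tanhf = tanh(16.*(x-128)./255); // S-shaped curve centered at gray level 128

tanhf = (tanhf - min(tanhf))./2; // shift and scale from about [-1, 1] to about [0, 1]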
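As a quick sanity check on this desired CDF, the sketch below rescales the curve to span exactly 0 to 1, confirms that it never decreases, and reports the band of gray levels over which it rises from 2% to 98%. The name `G`, the exact rescaling by `max(G) - min(G)`, and the 2%/98% thresholds are choices made here for illustration.

```scilab
x = 0:255;
G = tanh(16 .* (x - 128) ./ 255);

// Rescale so that G starts at exactly 0 and ends at exactly 1
G = (G - min(G)) ./ (max(G) - min(G));

// A valid CDF never decreases
disp(and(diff(G) >= 0));

// Gray levels over which G climbs from 2% to 98%
band = find(G > 0.02 & G < 0.98);
disp([min(band) - 1, max(band) - 1]);
```

With the steepness factor 16 used above, almost all of the rise happens within a few dozen gray levels around 128. Backprojecting onto this curve therefore sends most pixels into that narrow middle band, which may help explain a low-contrast result.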

Again, backprojection was done. The CDF value of each pixel value is located on the desired CDF, and the gray level at that point of the desired CDF becomes the new pixel value.

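b = pix;

c = cumsum(b);

f = c/max(c); // CDF of the image, normalized to [0, 1]

x = 0:255;

imsize = size(grays);

for i = 1:imsize(1)

for j = 1:imsize(2)

ind = find(x == grays(i, j)); // index of this pixel's gray level

pixel1 = f(ind); // CDF value of this pixel

pixel2 = find(tanhf <= pixel1); // indices where the desired CDF does not exceed it

grays(i, j) = x(max(pixel2)); // gray level at the largest such index

end

end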
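The same lookup-table idea applies to a nonlinear desired CDF: for each of the 256 input levels, find the largest gray level whose desired-CDF value does not exceed the image CDF value. This is a sketch on made-up data, assuming integer gray levels in 0..255; `img`, `G`, `lut` and `img_out` are illustrative names.

```scilab
// Toy low-contrast image (made-up values)
img = [100 100 101 101; 100 101 102 102; 101 102 102 103; 102 103 103 103];

// Histogram and normalized CDF of the image
counts = zeros(1, 256);
for g = 0:255
    counts(g + 1) = length(find(img == g));
end
T = cumsum(counts) / sum(counts);

// Desired CDF: tanh curve rescaled to span exactly 0..1
x = 0:255;
G = tanh(16 .* (x - 128) ./ 255);
G = (G - min(G)) ./ (max(G) - min(G));

// For each input level r, take the largest level z with G(z) <= T(r)
lut = zeros(1, 256);
for r = 0:255
    idx = find(G <= T(r + 1));   // never empty, because G(1) is 0
    lut(r + 1) = max(idx) - 1;   // idx is 1-based, gray levels are 0-based
end

// Apply the table and restore the image shape
img_out = matrix(lut(img(:) + 1), size(img, 1), size(img, 2));
disp(img_out);
```

Building the table costs 256 searches regardless of image size, whereas the per-pixel loop repeats the search once for every pixel.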

The transformed image using the nonlinear CDF is shown below.

Clearly, the transformed image is not as good as the image transformed using the linear CDF. The image becomes darker, with very poor contrast. We display its grayscale histogram and its CDF.

We can see from the grayscale histogram that the gray values are concentrated in a region at the center. The CDF shows that we were able to transform the grayscale image based on the desired CDF. However, we were not able to enhance the contrast of the image.

I give myself a grade of 9 for this activity. I was able to understand the backprojection of pixels easily; however, I had a hard time implementing it in code. I was also able to show the effect of using a linear and a nonlinear desired CDF to transform a grayscale image. In this activity, I learned how to assess an image just by looking at its grayscale histogram: the more uniform the distribution of gray values, the better the contrast of the image. I acknowledge the help of Gilbert, who tirelessly helped me debug my code and waited for me until I gathered all my results.
